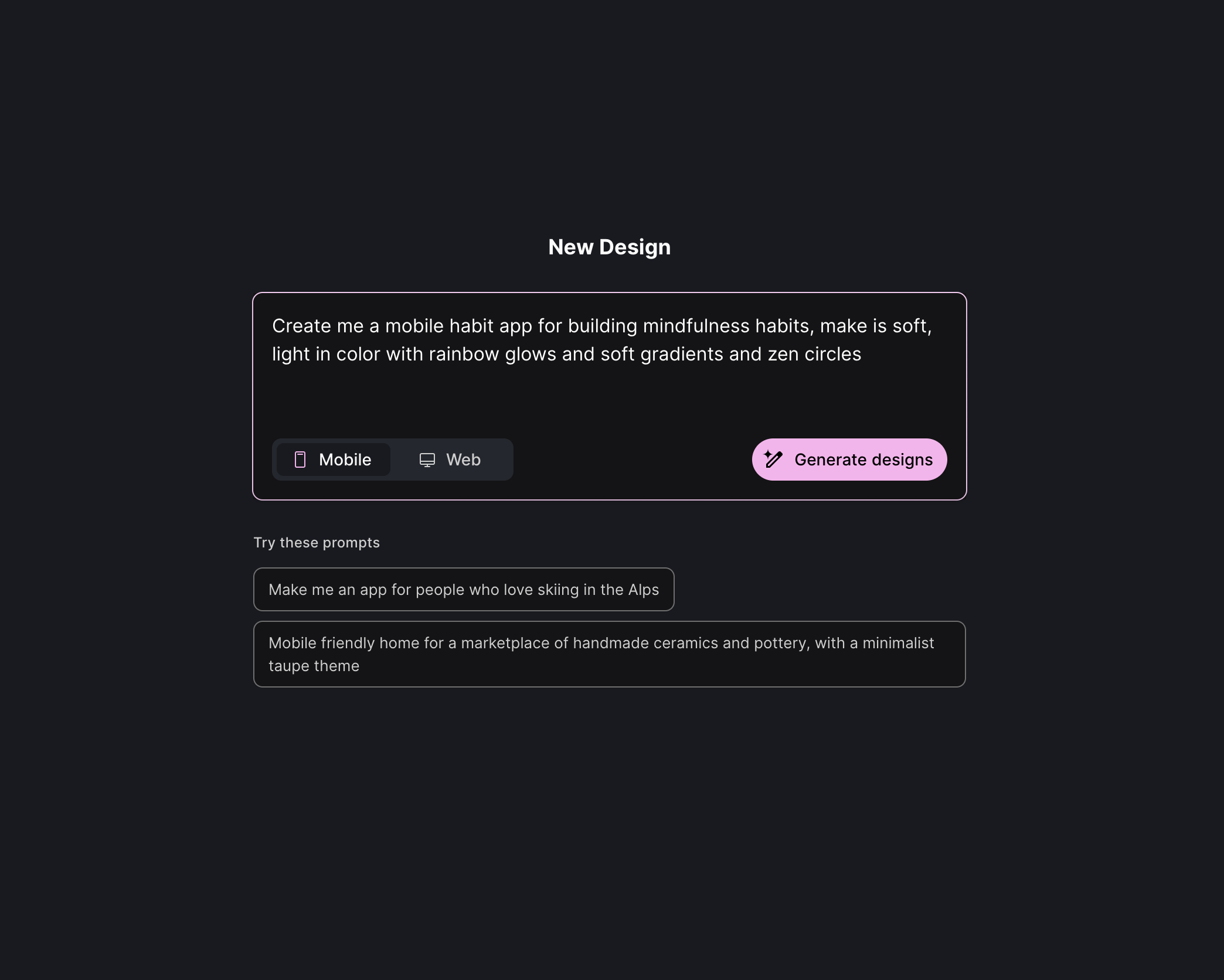

I just asked Google to make me a UI design—and it did. But is it any good?

At I/O 2025, Google unveiled Stitch, a Gemini-powered tool that promises to turn “ideas into UI designs and front-end code in minutes.” As a product (UX/UI) designer of 20 years, I wanted to see how this latest flavor of text-to-UI generation tool would stack up.

I started off by using a simple prompt for a mobile app.

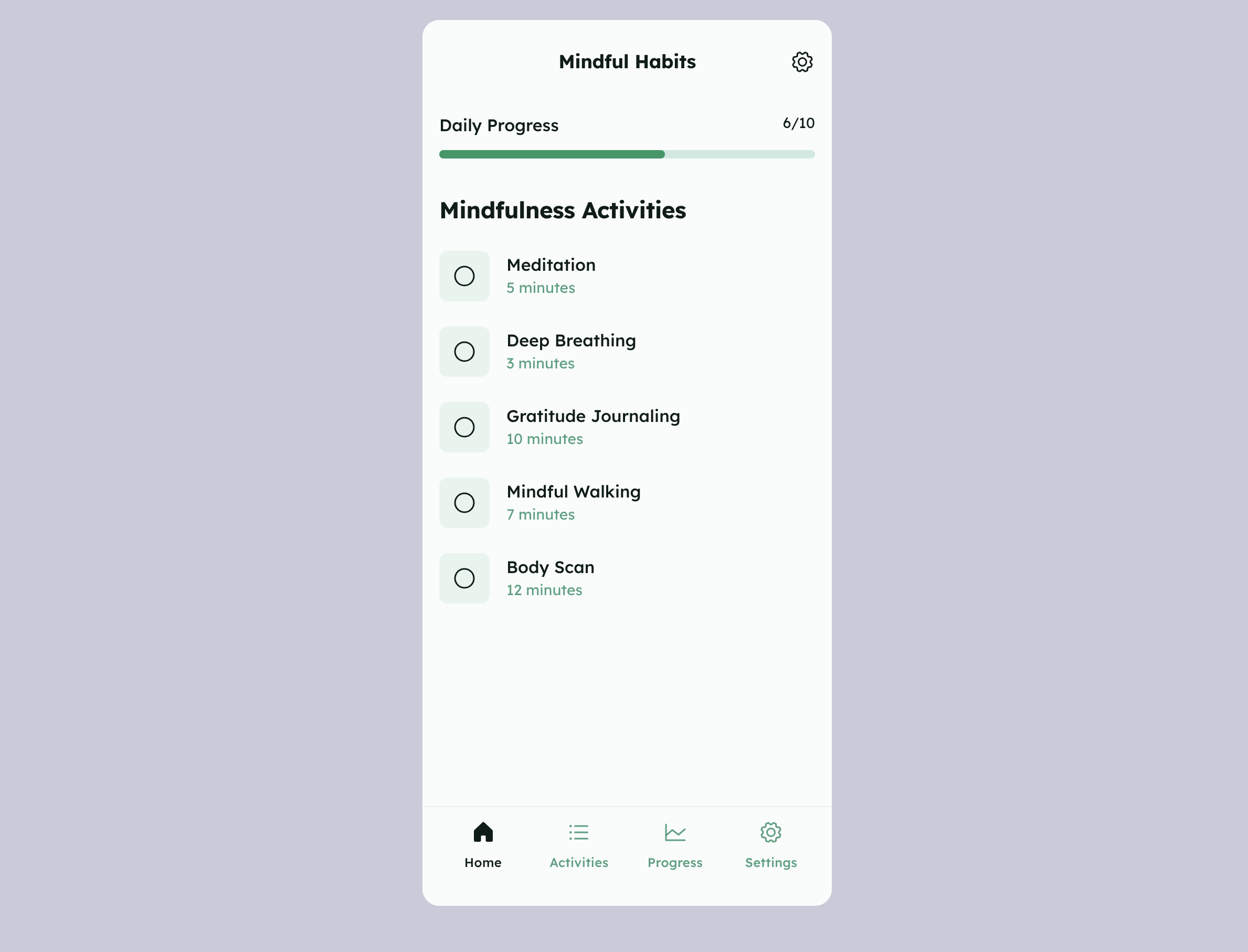

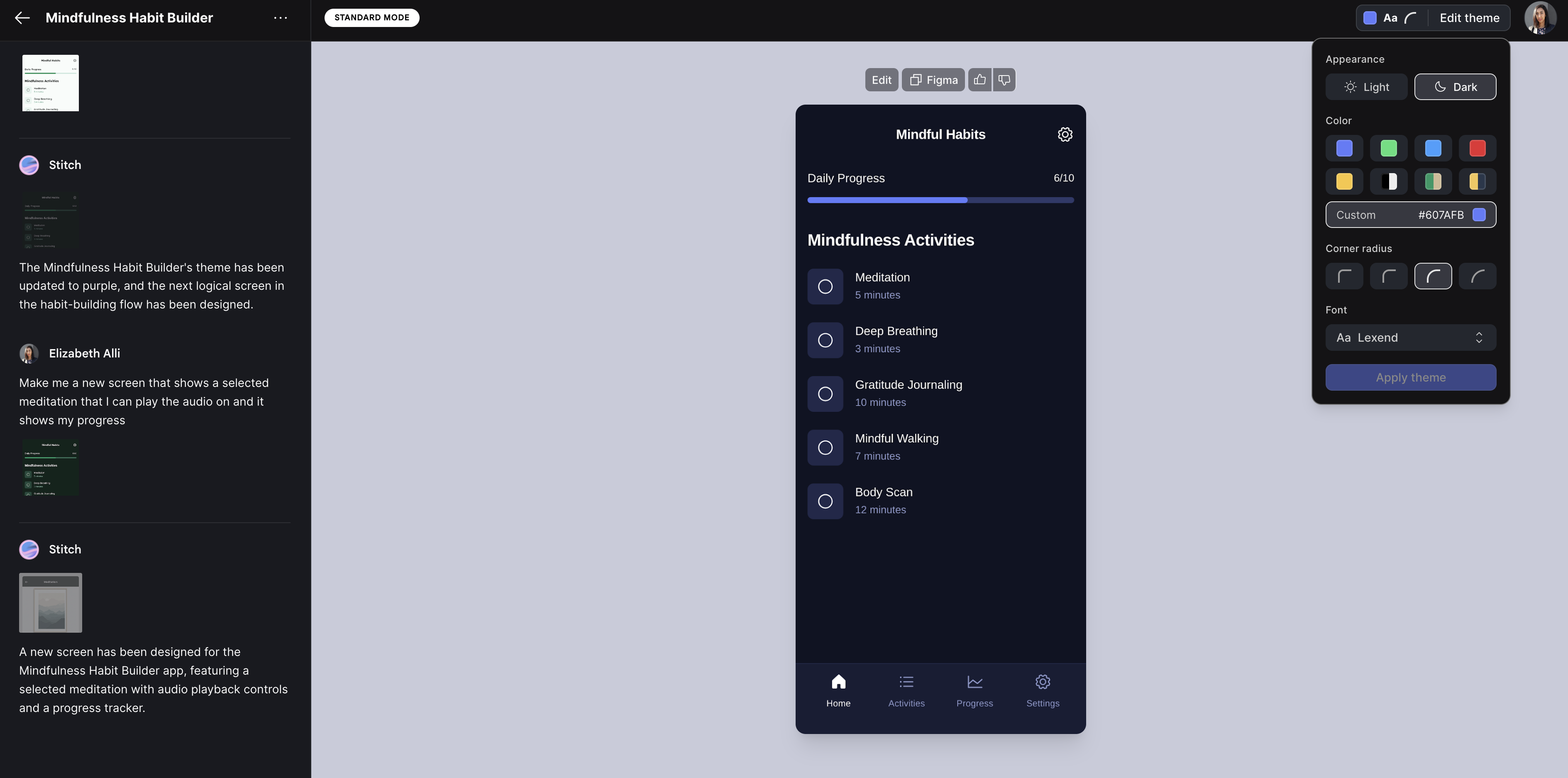

This is what it made:

It missed the mark on some of the stylized things that I asked it to do, but it’s clean otherwise. I can’t click around on it and do anything; it seems to have generated only one screen.

2. Editing

↳ Using a Prompt

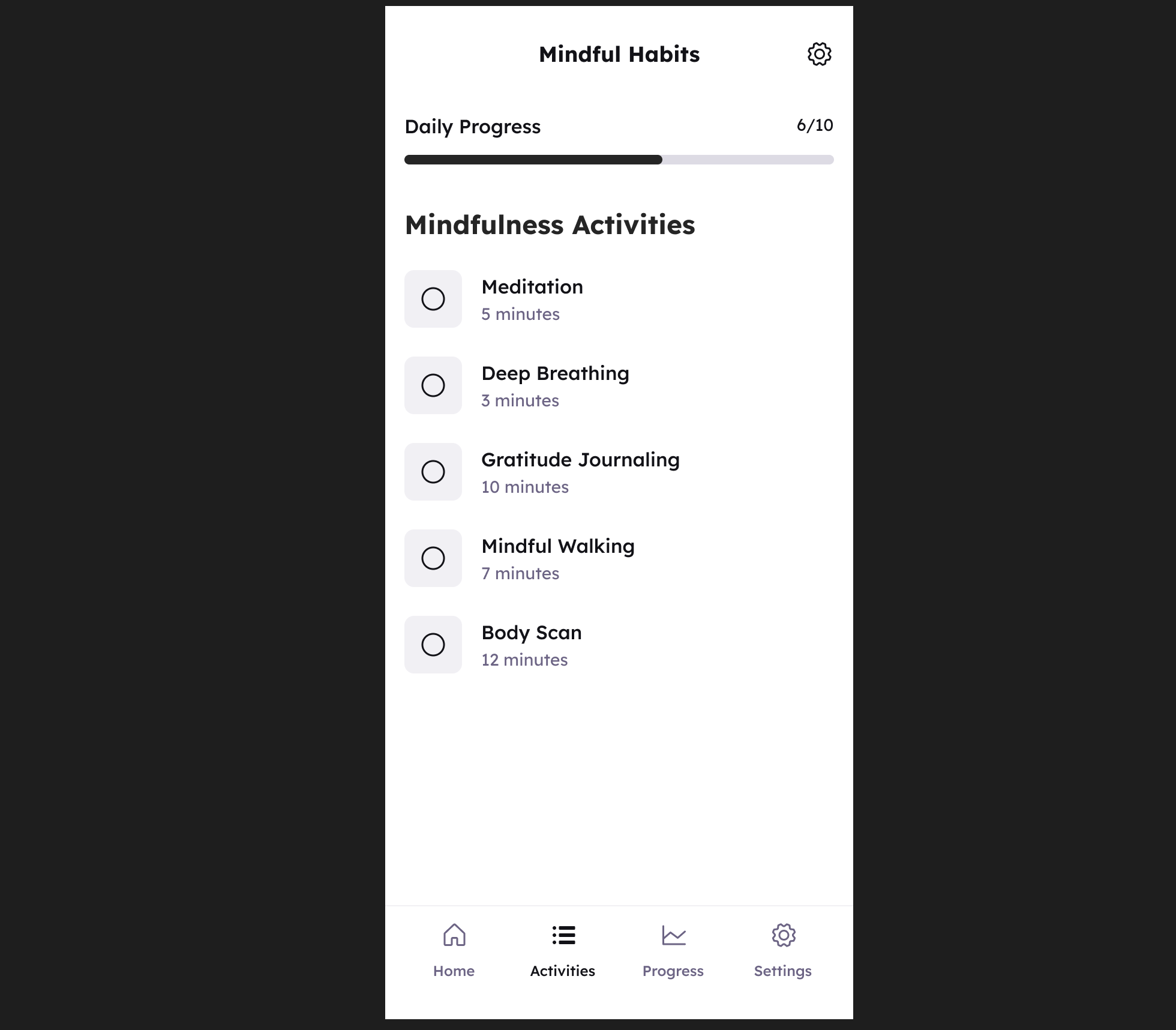

You can click Edit, which drops that screen into a text-prompt area and asks you again to describe your design. Let’s see if it can do multiple screens. This time I said, “Make it purple and make me the next logical screen.” You can see Stitch working in the text chat area. Generally, I found my prompts took about 2-3 minutes to generate. It adjusted the design to what seemed to be the very faintest purple tint (almost imperceptible) and made the primary color a dark gray, not quite the vivid purple hue I had in mind. It also did not, in fact, generate the next logical screen or any other screen.

↳ Using the Editing Options

There is also an option to edit a few elements using the edit modal in the top right corner. There are not many options to choose from: just a few colors, a color picker, a handful of Google Fonts, and a few corner radius options. (To be honest, I was anticipating this tying into Google's new Material 3 Expressive design system, or something far more advanced, creative, or interesting than this very basic, boring screen.)

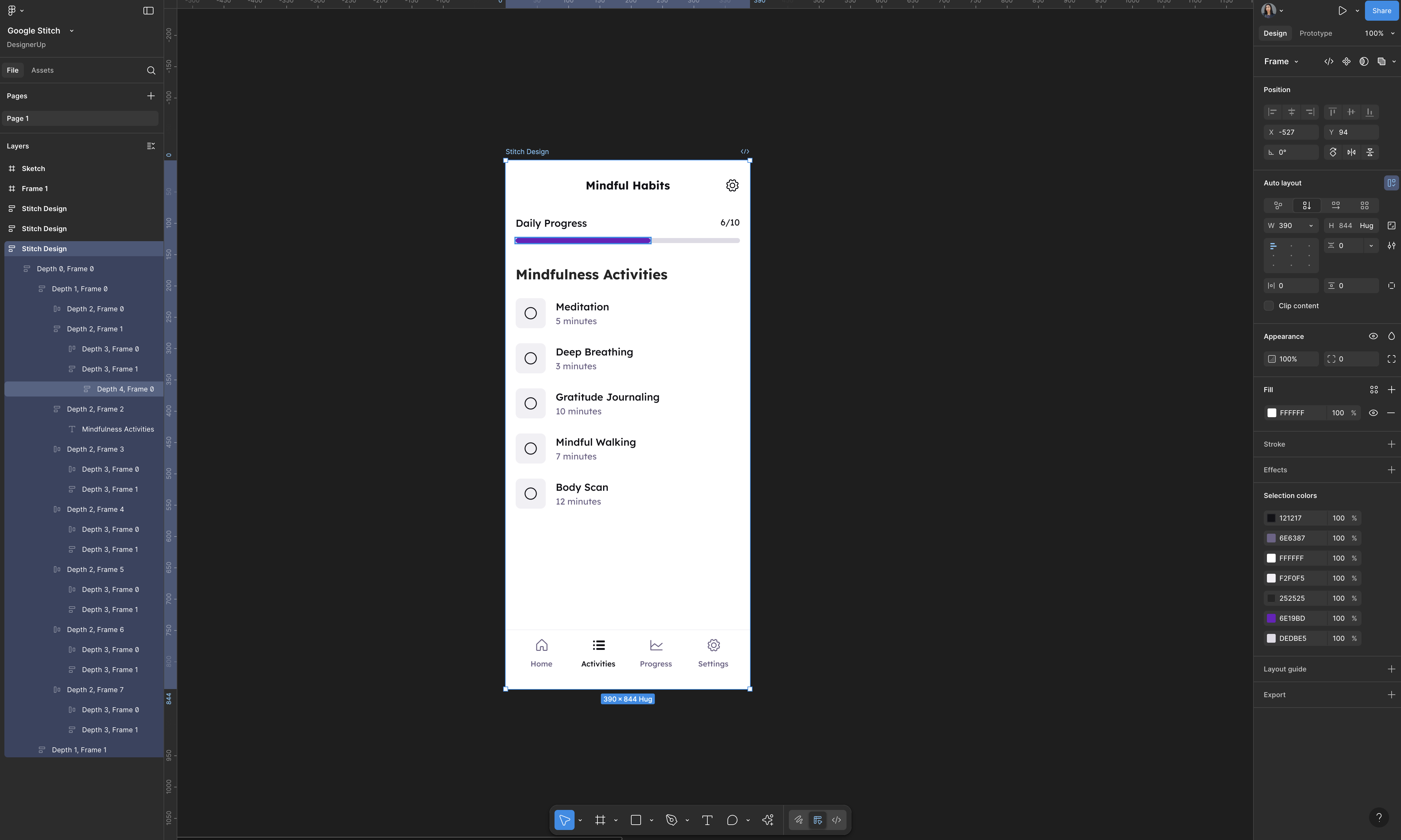

3. Importing into Figma (with Auto Layout)

What is interesting is that you can bring this into Figma. I clicked the Copy to Figma button and pasted into my Figma canvas, and the design dropped in with all the nested layers and Auto Layout. This made it pretty easy to work with, and I could finally get that purple I really wanted.

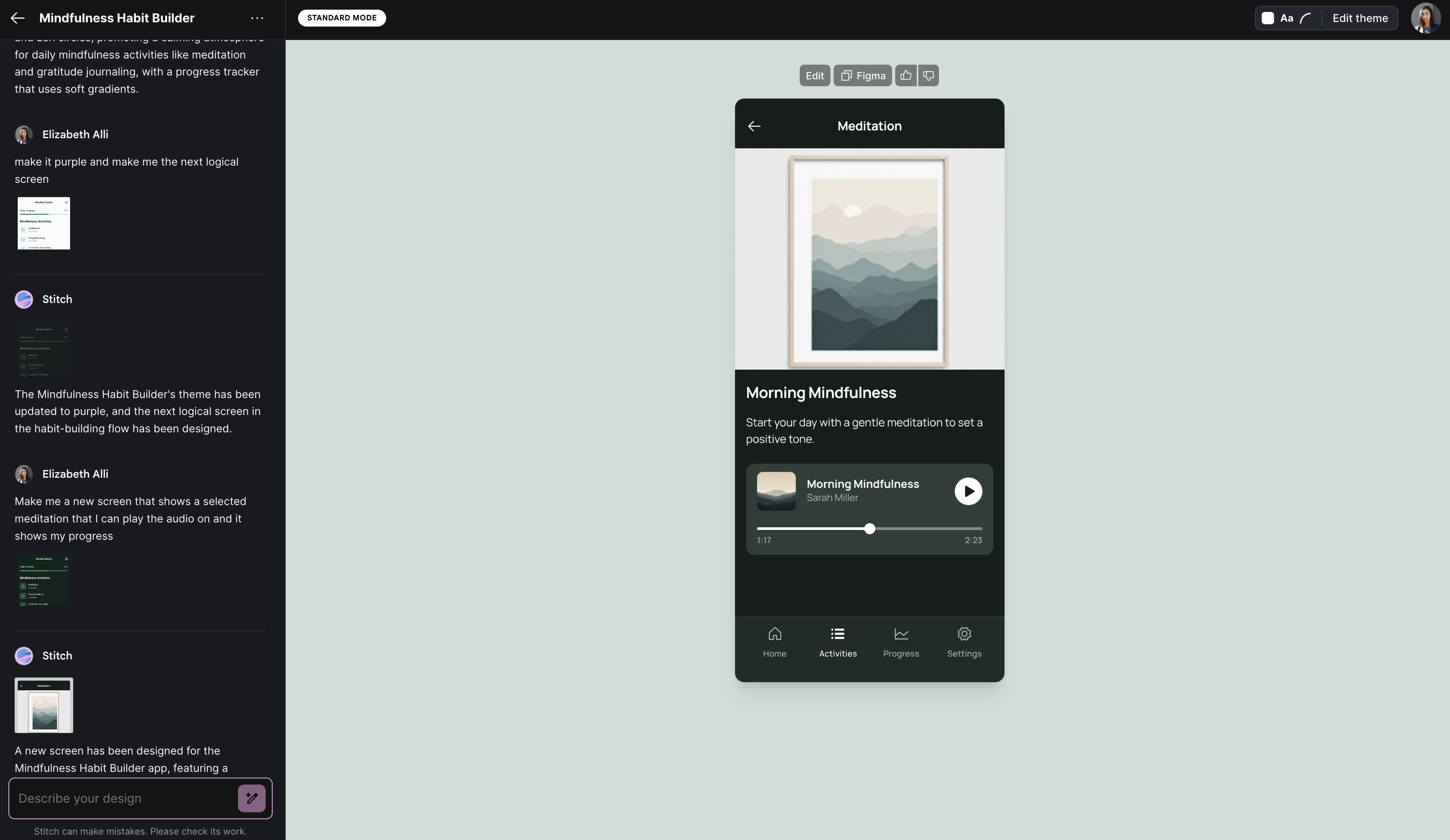

4. Generating another screen

I wanted to prompt the next screen in the flow. Imagining that we had clicked on the first 5 Minute Meditation in the list, I asked it to:

That kind of worked: it made the next screen, and it is relatively clean (save for the terribly aligned, framed photo that it also generated). The colors are a little off and don’t exactly match the previous screen.

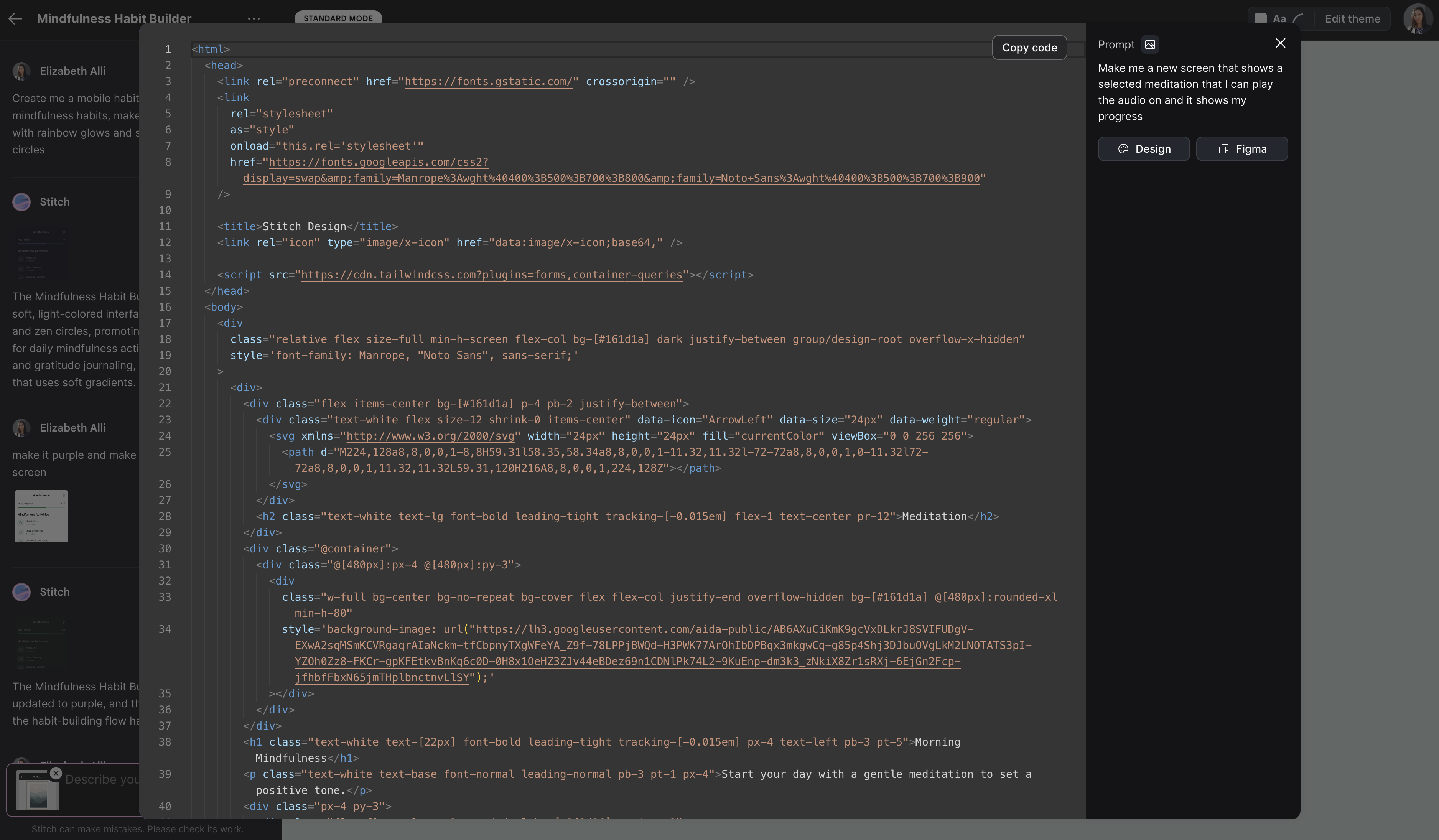

5. Exporting the Code

I found it interesting that you can export the code. I'm not sure how good the code is, but it is code. Of course, you still need to implement it properly, figure out all the other screens, and tie everything together with a back-end, but it seems to give you at least some structure to start with (though I'm not sure whether that is better or worse).

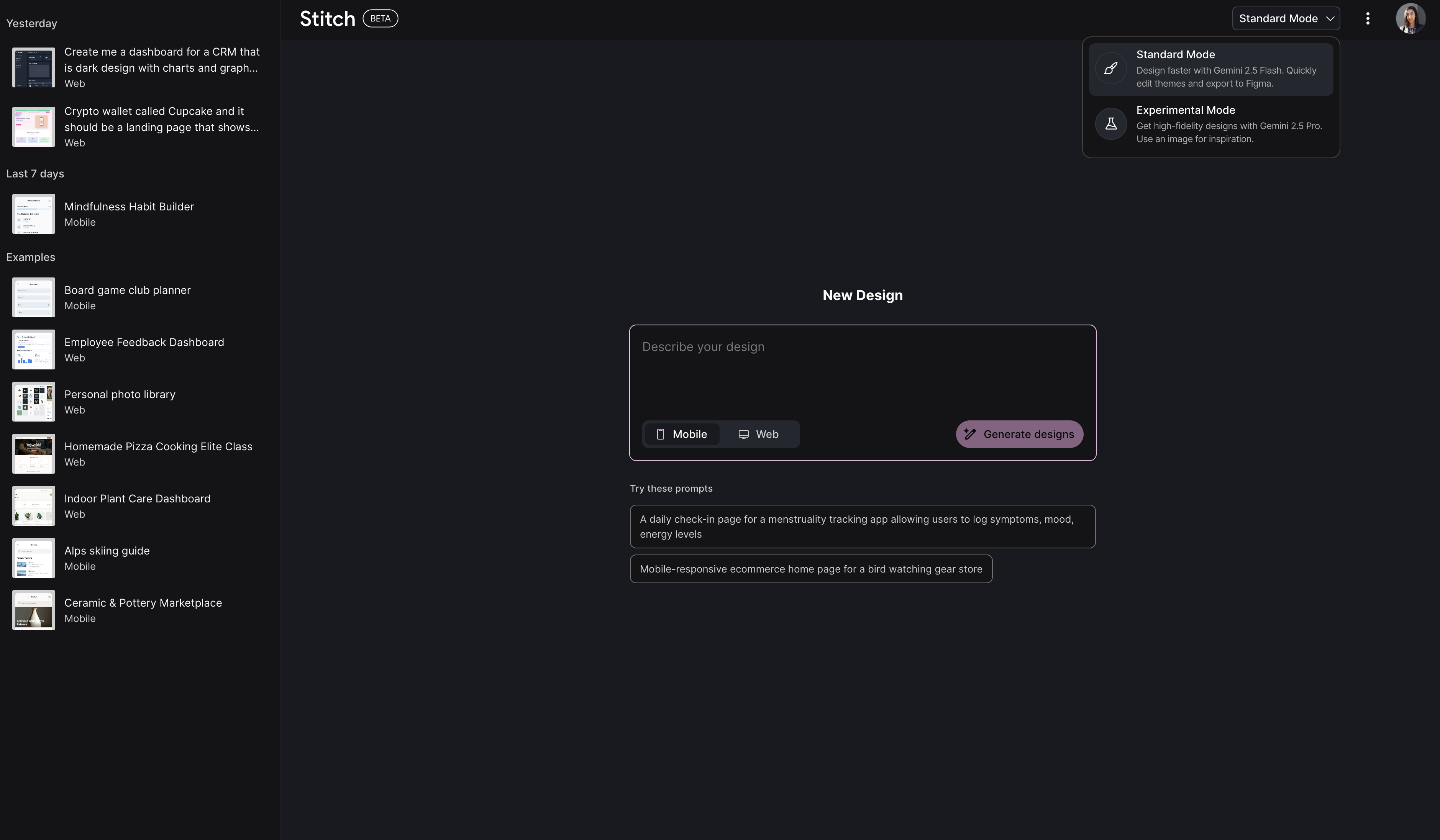

6. Switching to Experimental Mode

All of this was done in 'Standard Mode', which runs on Gemini 2.5 Flash. You can also switch to 'Experimental Mode', which uses Gemini 2.5 Pro and lets you generate UI designs in a few other ways:

↳ From an image

↳ From a wireframe

↳ From a mockup

I switched over to 'Web' to generate a website design this time and clicked the Image icon to upload an image for inspiration.

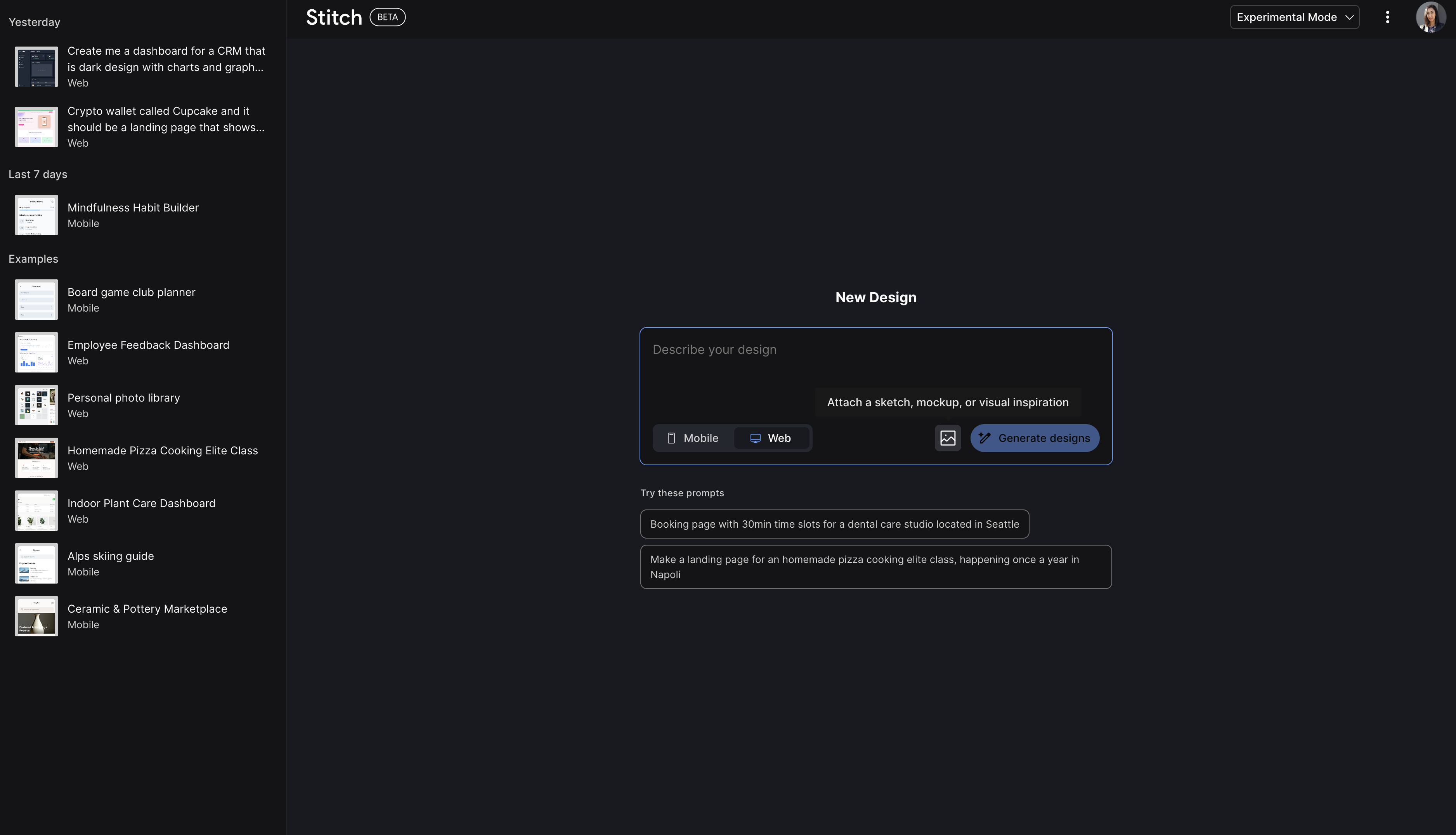

7. Generating a UI from an Image

I uploaded a website I had designed and described what I wanted: “A crypto wallet called Cupcake—make it a landing page.” After clicking Generate Designs, this is what it produced—and I was not impressed. The right side was cut off, sections didn’t go full-width, the typography was bad, the color combinations were worse, and the shadows and icons looked dated.

There is also no option to export to Figma; it states 'Figma export is not available yet with the upload-image feature'. You could view the code, but do you even want to? Experimental is an overstatement.

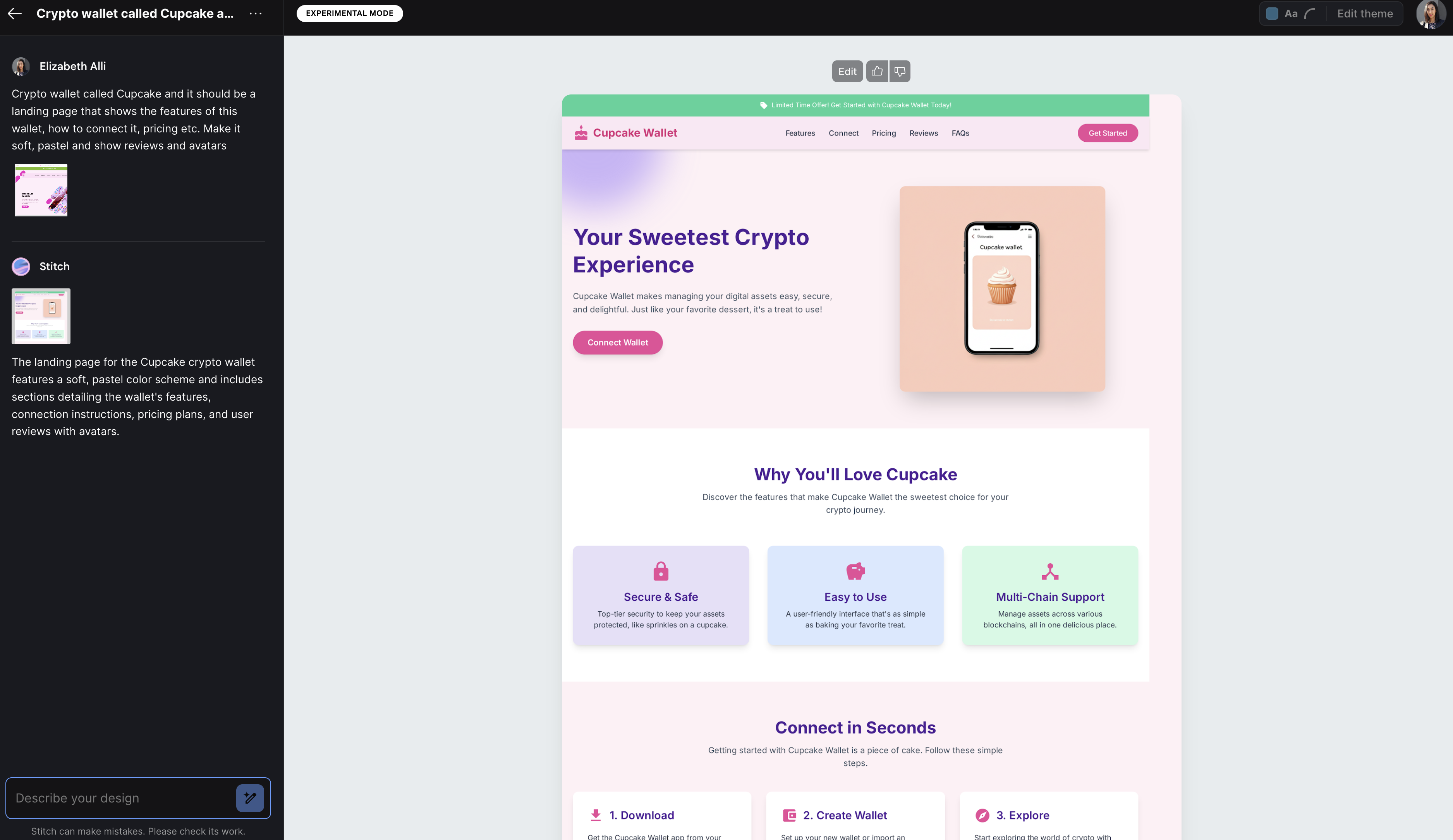

8. Generating a UI from a Sketch

Next, I took a quick, sloppily drawn wireframe sketch that I had made in Figma Draw and asked it to:

Stitch generated the design and asked if I wanted any changes. It was pretty clean and included most of what I had requested, but it was cut off with only one table row visible. The icons weren’t centered, there were grouping and alignment issues, and there were no actual charts.

A lot of design knowledge and best practices seem to go unconsidered in these renderings, which come across as mostly random approximations of what was intended. Even with more specific prompting, I didn't get much improvement in the result. To turn this into something useful, I would still need to reference existing design patterns and think through many flow, interaction, and aesthetic choices.

9. Final Thoughts

I had much higher expectations of Google, given that there are already so many AI UI design generation tools on the market that do this much better. Their effort seems half-baked at best. Although it is in beta, its output is nowhere near as polished as that of Figma's First Draft or Uizard's Autodesigner, for example. This release feels like a bit of a mad dash to throw their hat into the ring of the AI UI design hype, and it's hard to tell exactly who this is for (beginners? non-designers? pro designers?) and what they would do with it.

If you're just starting out as a designer, I know it can be tempting to vibe-design your way into creating websites and UIs, but be careful of the risk of becoming solution-focused rather than problem-focused. You still need to develop the discernment and taste to identify good, useful output and to judge whether it actually solves a problem for your users. That insight only comes from talking to your users and from knowing the right design patterns to use, the business model, and the tech stack that underpins your designs. That's exactly what I teach my students in my course and, at least for now, it's clear that it's not something AI is capable of generating away.

💬 What do you think? Would you use it and do you think it has potential for future improvement?